
I Used AI to Do My Job, and It Was Actually a Lot of Work

Several months ago, publicly available AI tools were coming online and the agency was starting to talk about them with a mix of awe and panic. Around the same time, an idea got tossed around for putting their creative potential (and potential limitations) to the test: What if we redesigned and rewrote our outdated agency website with the aid of artificial intelligence?

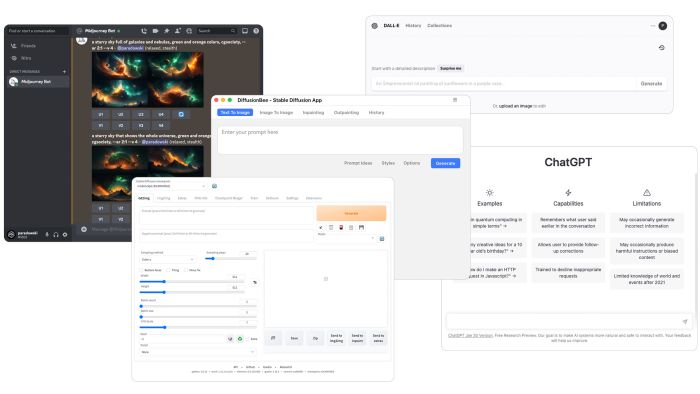

A small group, including AI lead Ryan Hogard, got together to generate the ultimate agency website using a mix of tools including GPT-3, Midjourney, DALL·E 2, and Stable Diffusion (DiffusionBee and AUTOMATIC1111/stable-diffusion-webui).

Having read a lot of criticism about AI models being trained to ape artists’ styles, often with work they didn’t consent to share, I was skeptical. And the tools weren’t intuitive to access, or to use. I spent a lot of time researching prompt engineering, poring over message boards, and testing which style of prompt worked best for which tool. What works for Midjourney, for example, might not work for Stable Diffusion or DALL·E 2. And what worked in Stable Diffusion 1.5 might generate drastically different results in Stable Diffusion 2.0.

The technology was advancing faster than the project.

Luckily there are whole sites dedicated to crafting better prompts — even AI for prompt-writing! — and soon enough, I was hooked.

Interiors and employees generated with DALL·E 2 using prompts like "photo of the interior of a trendy marketing agency with industrial aesthetic neon sign and a pool table" and "graphic designer with tattoos wearing spectacles standing against a brick wall with arms folded."

The process was slightly different for developing each of our two layouts. We began by using AI for initial concept exploration. Then, we treated each layout as an independent branding opportunity using the results of our experimentation, combined with traditional design thinking.

We personalized our layouts using a combination of AI-generated interiors and employee portraits. Our most successful generators were Midjourney and DALL·E 2, and every design included a mix of images from both. Stable Diffusion also contributed, but it required additional guidance to produce usable images. Below is a breakdown of how we created each layout with the help of AI, from wireframes to faux photography:

Layout 1: Concrete Jungle

After lots of trial and error, and more than 100 experimental prompt generations, we found a set of renders whose styles worked together. We combined the renders into a traditional collage for the web user experience. After these steps, we treated the rest of the branding and layout as a more conventional branding/web project.

Concepting

1

We used all three primary image generation tools to find the right visual direction. We started with Midjourney, then moved to DALL·E 2 to experiment with various layout options. Finally, Stable Diffusion helped us generate more layout options for further consideration. Ultimately, we relied on Midjourney for the majority of our visuals.

2

Midjourney Prompt: a concrete underground station at night, with nature in the background, olafur eliason, thomas wilfred, the designers republic, spin design agency, extreme detail tritone experimental flat graphics --ar 5:7 --no blur dof bokeh distortion --iw 1.25 --q 2
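The prompt above has two parts: a comma-separated run of descriptors (subject, artist and studio references, style terms) followed by parameters for aspect ratio (`--ar`), excluded elements (`--no`), image weight (`--iw`), and quality (`--q`). As a rough sketch of how prompts like this could be assembled and varied between generations, the helper below rebuilds the same string from its parts. `build_prompt` is a hypothetical helper written for this illustration; it is not part of any Midjourney tooling, and it only formats text.

```python
def build_prompt(descriptors, params):
    """Join descriptors with commas, then append --name value parameters.

    Hypothetical helper for illustration only; it formats a string and
    does not call any image-generation service.
    """
    text = ", ".join(d.strip() for d in descriptors if d.strip())
    flags = " ".join(f"--{name} {value}" for name, value in params.items())
    return f"{text} {flags}".strip()


prompt = build_prompt(
    [
        "a concrete underground station at night",
        "with nature in the background",
        "olafur eliason",
        "thomas wilfred",
        "the designers republic",
        "spin design agency",
        "extreme detail tritone experimental flat graphics",
    ],
    {"ar": "5:7", "no": "blur dof bokeh distortion", "iw": 1.25, "q": 2},
)
print(prompt)
```

Keeping descriptors and parameters separate makes it easier to swap one reference or one setting at a time, which is how most of the trial and error tends to go.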

3

We selected these branding themes to help guide our process: Spiraling Pattern, Exploration & Sci-fi Book Covers.


4

We selected a color palette from AI-generated exports using the Extract Theme tool in Adobe Color.
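For readers curious about what pulling a palette out of an image involves, here is a minimal sketch of one simple approach: group similar pixels into coarse buckets and keep the most common ones. This is not Adobe Color's method, which we don't know in detail. The function name `extract_palette`, the `step` bucket width, and the sample pixel data are all assumptions made for illustration. Reading pixels from a real image file would need an imaging library, which is left out here.

```python
from collections import Counter


def extract_palette(pixels, n_colors=5, step=32):
    """Return up to n_colors dominant colors as hex strings.

    pixels: iterable of (r, g, b) tuples with channels in 0-255.
    step: bucket width used to group similar colors (an assumed default).
    """
    counts = Counter()
    sums = {}
    for r, g, b in pixels:
        key = (r // step, g // step, b // step)
        counts[key] += 1
        total = sums.setdefault(key, [0, 0, 0])
        total[0] += r
        total[1] += g
        total[2] += b

    palette = []
    for key, count in counts.most_common(n_colors):
        # Average the real pixel values in the bucket for its swatch color.
        r, g, b = (channel // count for channel in sums[key])
        palette.append(f"#{r:02x}{g:02x}{b:02x}")
    return palette


# Made-up pixel data: mostly red, some near-black, a little near-white.
sample = [(200, 30, 30)] * 6 + [(20, 20, 20)] * 3 + [(240, 240, 240)] * 1
print(extract_palette(sample, n_colors=2))
```

A coarse bucket count like this favors large flat areas of color, so a designer's eye is still needed to pick the accents that make a palette work.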

Layout

5

Building on the branding themes and concept experimentation, we composed the layout as a collage of AI-generated textures and forms, blending traditional design principles with AI.

6

We chose typography inspired by science fiction book covers to match the overall design aesthetic.

7

We returned to DALL·E 2 and used its outpainting tool to adjust the layout for all screen sizes.

Development

8

We added parallax scrolling to give the page depth and create a more immersive experience for the user.

Layout 2: Bead Maze

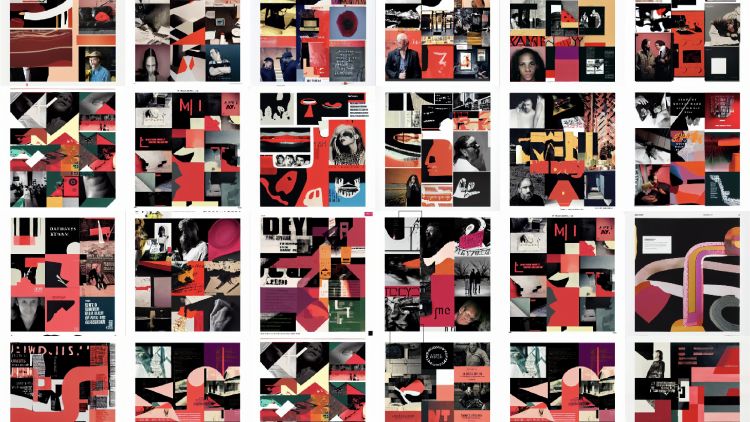

DALL·E 2's purely generated art served as a flexible blueprint for our branding (and ultimately our web layout), allowing us to experiment and create a visual direction that captured our interest.

Concepting

1

To expand the artboard, we used Outpainting in DALL·E 2. We then uploaded the result to Stable Diffusion to generate a wider range of layout options. After careful consideration, we chose a visual direction by keeping what worked and discarding the rest.

2

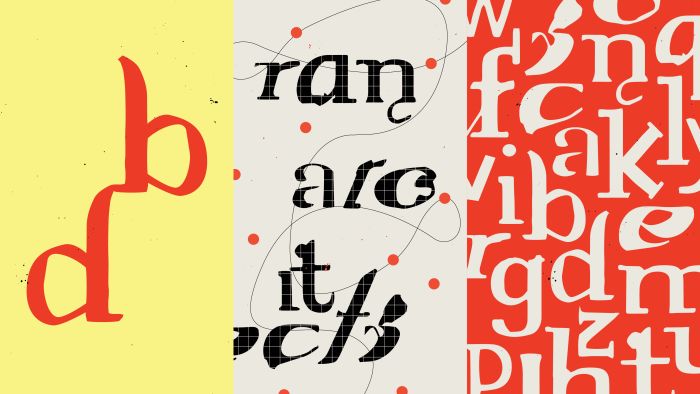

DALL·E 2 Prompt: typography, layout design, graphic design, deconstructed, april greiman | katherine mccoy | david carson | jefferey keedy | james victore | tibor kalman | neville brody

3

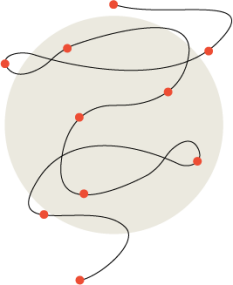

We selected these branding themes to help guide our process: Pattern, Connecting Threads & Irregular Letter Forms.


4

We selected a color palette from AI-generated exports using the Extract Theme tool in Adobe Color.

5

We built upon the branding themes and concept experimentation from AI generations to design the web user experience.

6

We were delighted to discover that we could create a one-of-a-kind typeface from the additional visuals generated by the outpainting feature of DALL·E 2. We named the custom typeface Off-kilter.

Development

7

We introduced a slight chromatic aberration on scroll to create a subtle out-of-tune, off-balance feeling that reflects the AI imagery from our original concept experimentation. The scrolling motion is emphasized by red beads that move down a twisting string, guiding your gaze through the content in a playful manner reminiscent of a child's bead maze table.

AI is going to make your job easier

Incorporating AI into your creative process is a little like riffing back and forth in a brainstorm session with a brain that’s not yours.

In this application, AI helped us generate multiple beautiful, intentional site designs and a lot of unintentionally hilarious copy. But as the project progressed, I found myself using it on other projects, throughout my process. As a designer, I find the most remarkable advantage is probably the speed and ease of iteration, and the acceleration of the experimental proof-of-concept phase. Forms and textures that were difficult or time-consuming to create now take a matter of seconds. And just as valuable as getting to good ideas faster was recognizing and abandoning concepts that just weren’t working.

Ultimately, this project (and its temporary takeover of our homepage and social feeds) was a creative vehicle through which to share our perspective on the incredible potential of AI as an addition to the creative process — and its severe limitations as a replacement for human creativity.

The site has reverted to its original form, but there’s no going back for me.

Midjourney: Trophy statue of man working on computer, trophy marble gold, wearing clothes, 4k, photorealistic, 50mm lens, realistic --v 4