
Above Par-adowski: WebXR Mini-Golf in 40 Hours or Less

The company putt-putt tournament takes to “the metaverse”

Paradowski Creative, the ad agency and tech firm where I’ve served as director of emerging technology for three years, is in the thick of its most ambitious, most technically-complex virtual reality project ever, working on behalf of a Fortune 50 client on a game-changing new product line. Our teams of designers, 3D artists and developers have full schedules addressing the myriad creative, technical and business objectives before us.

This is not the story of that project.

Rather, this is the story of how, in the midst of that, our creative team came forward with a proposition. That is, this calendar notification appeared, which sounds like the title of a meeting The Joker would set with Batman:

I’m told our agency will hold its first-annual Mini Masters putt-putt tournament on April 13, 2022, where our oft-remote teams will gather in person and on premises, many for the first time in years, to compete for glory and an ill-fitting green sports coat on improvised mini-links hastily constructed throughout our St. Louis office. There will be a drink cart. I’m in, obviously. But what does the tech team have to do with it?

The ask:

Could one of the holes be in VR?

You can make anything you want.

We will give you no notes.

It’s in like, two weeks.

As the first line of defense of our tech team’s time and energy, I’d usually scoff. We’re already busy, and even the simplest VR game idea can conceal oceans of hidden complexity roiling beneath single-paragraph design briefs and key art, to say nothing of the two-week timeline.

And yet… “Anything you want” and “no notes” — this holds appeal. Further, think of putt-putt: it’s highly spatial, taking advantage of VR’s strengths as a medium — but the entire game loop consists of three-ish simple, slow physics interactions. It could be doable, even fun. Maybe.

I consider the other axes we have to grind: our teams are proponents of the 3D open web, which is the only real analog to the much-hyped “metaverse.” Unbeknownst to most, the modern web browser is a fully-functional virtual reality platform thanks to the now-widely-adopted WebXR spec. Although a handful of performance bottlenecks still plague this distribution channel, they shouldn’t be a problem for our project specifically. There’s real opportunity here to show off what WebXR can do, and how fast.

A number turns into a question: 40 hours — how far could we take this in 40 working hours? We can squeeze that in, surely.

I remain non-committal in front of my colleagues for now, in case things don’t go as planned, but work begins immediately.

As WebXR and the larger 3D open web explode in utility and popularity, Ada Rose Cannon is a leader in the field. Co-chair of the W3C Immersive Web Groups and frequent open source prototyper, she’s developed and written about several AR/VR starter projects that come with useful base components like complex object grabbing behavior, “magnet helpers,” and even hand-tracking pose estimation.

Ada Rose Cannon (@AdaRoseCannon), March 12, 2022:

“AFrame's animation system is really powerful. When you let go of the objects they now animate back to their start position and I didn't need to write any extra code for it. I added an animation with an undefined 'from' position that starts whenever the put-down event is fired.” pic.twitter.com/MzA85ClxnM

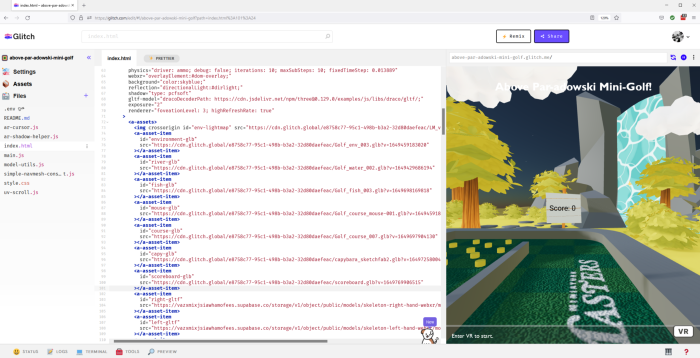

Ada often publishes her work on Glitch, a browser-based development platform which combines a code editor and asset manager with instant live deploys, removing much of the overhead cost of hosting and distribution for web apps and games. Code and asset changes are reflected in production instantly, and I can test new iterations in my headset, mobile or desktop device simply by refreshing the browser. I love the idea that not only can entire VR games run in a web browser, we can even develop and host them there. Oh, and it’s free. Okay, I’m sold.

A preview panel to the right will hot-reload whenever changes are made in the code editor on the left.

What you’re seeing in the Glitch editor is A-Frame code, which for years has remained the easiest way for web developers to start making WebXR-compliant virtual reality in the browser. It’s a library that wraps around three.js/WebGL rendering and attempts to partially represent 3D scenes as traditional browser DOM nodes resembling HTML syntax. Further, its JavaScript entity-component system has lifecycle hooks that allow for robust game scripting similar to what Unity or Unreal game engines offer. Lastly, A-Frame supports a diverse array of VR and AR devices, including my target VR headset, the Meta Quest 2 and its default browser. Our team has deep experience working with A-Frame (often through the networked lens of Mozilla Hubs), making it a great match for our project goals here.
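As a rough illustration of those lifecycle hooks, here is a minimal sketch of an A-Frame component. The component name `slow-spin` and its `speed` property are made up for this example and are not part of our game; the hooks themselves (`init`, `update`, `tick`) are the ones A-Frame calls on any registered component.

```javascript
// Plain object holding the lifecycle hooks. A-Frame calls these on every
// entity that carries the component. The name "slow-spin" is hypothetical.
const slowSpin = {
  schema: { speed: { type: 'number', default: 90 } }, // degrees per second

  init() {
    // Runs once, when the component is first attached to an entity.
    this.radiansPerMs = 0;
  },

  update() {
    // Runs after init and again whenever the component's data changes.
    this.radiansPerMs = (this.data.speed * Math.PI) / 180 / 1000;
  },

  tick(time, timeDelta) {
    // Runs every rendered frame; timeDelta is milliseconds since the last frame.
    this.el.object3D.rotation.y += this.radiansPerMs * timeDelta;
  },
};

// Register only when A-Frame is loaded, so the file also runs outside a browser.
if (typeof AFRAME !== 'undefined') {
  AFRAME.registerComponent('slow-spin', slowSpin);
}
```

In a scene, the component would then be attached in markup, for example `<a-box slow-spin="speed: 45"></a-box>`.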

With that, we have our tech stack decided on and many useful components pre-packaged and ready to go. So what are we making?

Here’s the basic game loop:

1. Grab the club with a VR controller

2. Increment the score on club-to-ball contact

3. Simulate forces of club-to-ball and ball-to-ground contact, plus ball momentum, friction, restitution (“bounciness”), etc

4. The game loop ends when the ball comes into contact with the hole
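Stripped of the physics, the scorekeeping part of the loop above is a tiny state machine. The sketch below is an illustration under assumptions, not the code we shipped: the function names and the body labels (`club`, `ball`, `hole`, `ground`) are hypothetical, and it expects some collision event to tell it which two bodies touched.

```javascript
// Minimal scorekeeping state for one hole. All names here are hypothetical.
function createHole() {
  return { strokes: 0, holedOut: false };
}

// Apply one contact between bodies `a` and `b` and return the next state.
function onContact(state, a, b) {
  if (state.holedOut) return state; // ignore contacts once the hole is finished

  // Sort the labels so the order in which the bodies are reported does not matter.
  const pair = [a, b].sort().join('+');

  if (pair === 'ball+club') {
    return { ...state, strokes: state.strokes + 1 }; // step 2: count the stroke
  }
  if (pair === 'ball+hole') {
    return { ...state, holedOut: true }; // step 4: the loop ends
  }
  return state; // ball-to-ground and everything else is left to the physics engine
}
```

One caveat with this approach: a physics engine can report several contacts for a single swing, so a real version would likely need a short cooldown before counting the next stroke.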

You’ll notice this is mostly physics-based (that is, moving bodies with mass interacting with each other in a “realistic” way). Physics simulation is an incredibly complex problem, but fortunately, A-Frame has a physics system based on ammo.js that will handle our use case nicely: the ammo-shape component can generate a collider from the golf course mesh automatically, while the club and ball are easily represented as hull and sphere primitives, respectively.

There’s even a great visual debug mode. Toggling <a-scene physics="driver:ammo;debug:true;"> yields these helpful visuals:

We’re able to derive static physics meshes from whatever 3D course model mesh is passed in.
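For reference, the body and shape setup described above might be wired up roughly like this. Treat it as a sketch under assumptions: the `ammo-body` and `ammo-shape` attribute values reflect my understanding of the physics system's Ammo driver and should be checked against its documentation, the kinematic club is a guess rather than our shipped configuration, and `applyPhysics` and the role names are hypothetical helpers.

```javascript
// Assumed attribute values for the three physics bodies in the game.
const PHYSICS_ATTRIBUTES = {
  // The course never moves, so its collider can follow the model mesh exactly.
  course: { 'ammo-body': 'type: static', 'ammo-shape': 'type: mesh' },
  // Assumption: the club is driven by the controller rather than by forces.
  club: { 'ammo-body': 'type: kinematic', 'ammo-shape': 'type: hull' },
  // The ball is fully simulated.
  ball: { 'ammo-body': 'type: dynamic', 'ammo-shape': 'type: sphere' },
};

// Apply the attributes for a role to an entity (anything with setAttribute).
function applyPhysics(el, role) {
  const attrs = PHYSICS_ATTRIBUTES[role];
  if (!attrs) throw new Error(`Unknown role: ${role}`);
  // Object.entries keeps insertion order, so ammo-body is set before ammo-shape.
  for (const [name, value] of Object.entries(attrs)) {
    el.setAttribute(name, value);
  }
}
```

In the browser this would be called with real entities, for example `applyPhysics(document.querySelector('#ball'), 'ball')`, where the `#ball` id is again an assumption. For model-based shapes it is probably safest to do this after the model has finished loading.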

In under a day of dev time, the basic game loop is up and running.

jamesckane (@jamesckane), March 30, 2022:

“Prototyping mini-golf mechanics & physics on Quest 2. I used @AdaRoseCannon's @aframevr #WebXR starter project on @glitch to iterate fast - bonkers you can dev, test & distribute entirely in the browser. Here's Ada's code (posting mine when finished): https://t.co/5YwqkGFrt1” pic.twitter.com/tWwqxsvECu

With 30-ish hours left in my imaginary 40-hour budget, we’ve plenty of time to bring in the great AJ Johri, one of our top 3D technical artists and a master at producing highly-optimized, beautiful 3D assets for the web using the open source Blender suite. I knew AJ would have something up his sleeve that would show off his unique style while working in elements of event branding:

“When this project was hinted at in one of our team’s stand-up meetings, I started thinking about how fun the space could be and all of the cool things we could add to it. Stylized art is my forte, and once I found out that there were practically no limitations on what the design could be, I knew this was my chance to play to my strength! We have a profound fondness towards animals at our agency, so a wildlife sanctuary-like space made perfect sense as a setting. Having done plenty of research and tests towards creating heavily optimized assets for different prior client projects, the process of creating this small forest like space with a lot of animated elements was much quicker than I anticipated. With a combined file size of just under 4mb, I’m personally really happy with how all of the assets used in the game turned out while utilizing very little video memory.”

— AJ Johri, 3D Technical Artist

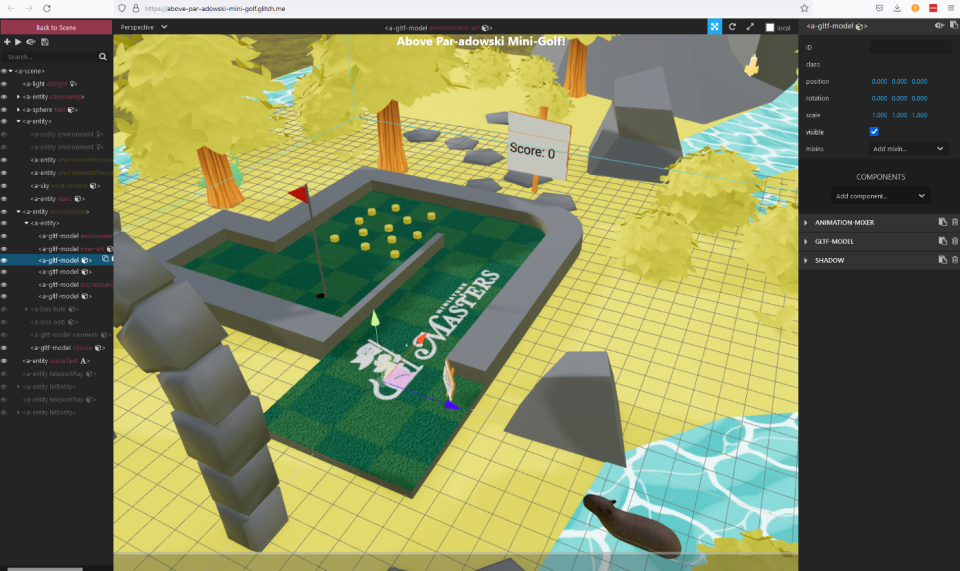

AJ’s art through the lens of A-Frame’s “inspector mode,” which offers another Unity-like way to view and manipulate your scene and its components. The noble capybara was added in tribute to AJ’s great work.

Next we turned to improving gameplay, fixing bugs and tuning physics. There are few people I’d rather collaborate with than our immersive tech lead, Ethan Michalicek, and once I shared the Glitch project we were able to simultaneously edit project code remotely just as if it were a Google Doc.

True to form, Ethan quickly analyzed and resolved several bugs and UX issues. The chief problem, predictably, was the physics. Our default implementation worked, but just barely. For example, the ball would completely stop when it hit a surface rather than bouncing off, and clipping through the course walls (or worse, the floor) was common if the ball was moving too quickly or if our framerate dropped for any reason. Ethan breaks down how he approached the problem:

“One of the biggest challenges when rapidly prototyping any physics-based interactive experience is solving for edge cases caused either by unexpected interactions or instability within the physics system. With a catch-all already in place for the former problem, the only thing left to do was tune the physics to work as well as possible, as often as possible.

“Unfortunately, because this experience runs in a browser and at somewhat limited framerates, this wasn’t easy. For starters, I increased the collision margins on everything in order to prevent ‘quantum tunneling’ (basically, physics objects phasing through each other). We increased the physical size of the course boundaries as well to alleviate the ball clipping through the walls. Finally, I also enabled CCD — continuous collision detection, which trades a little bit of performance for collision detection between frames.

“But collisions weren’t the only problems that sprang up. Perhaps the trickiest one to solve was simply to get the ball to stop rolling. While simply turning up the drag and the friction seems like the one-stop solution, the physics library we ended up using failed to slow the ball further when friction and drag values were low (I assume due to some floating-point rounding error). Instead, I added a supplementary check on the ball every tick to see if it had fallen below a certain speed, and then after that, gradually reduced its velocity each tick until it came to a stop.” — Ethan Michalicek, Immersive Tech Lead
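Ethan’s supplementary check could look something like the sketch below. This is an illustration, not his actual code: the function name, the thresholds and the damping factor are all assumptions chosen to make the idea concrete.

```javascript
// Hypothetical tuning values; the thresholds used in the game are not listed here.
const SLOW_SPEED = 0.05;   // m/s: below this, start helping the ball stop
const REST_SPEED = 0.005;  // m/s: below this, snap to a full stop
const KEEP_PER_TICK = 0.9; // fraction of velocity kept on each assisted tick

// Given the ball's velocity {x, y, z}, return the velocity to use next tick.
function settleVelocity(v) {
  const speed = Math.hypot(v.x, v.y, v.z);

  // Fast enough: leave the ball entirely to the physics engine.
  if (speed >= SLOW_SPEED) return v;

  // Slow enough to call it stopped.
  if (speed < REST_SPEED) return { x: 0, y: 0, z: 0 };

  // In between: shave off a little velocity each tick.
  return {
    x: v.x * KEEP_PER_TICK,
    y: v.y * KEEP_PER_TICK,
    z: v.z * KEEP_PER_TICK,
  };
}
```

In a component, this would run inside `tick`, with the result written back to the ball’s physics body. Because the damping is applied per tick rather than per second, the ball settles more slowly when the framerate drops; scaling the factor by the frame time would even that out.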

In no time the Mini Masters was upon us as (physical, corporeal) mini-links were established across our office, with several outdoor holes being moved indoors last-minute due to inclement weather. A work of staggering creative genius in its own right, the design and production of the Mini Masters is itself well-deserving of a blog post such as this — but for the sake of brevity, we’ll remain focused on our VR game aside from this quick vibe check:

The logistics of running public VR demos for large crowds can be challenging. The importance of one-to-one instruction for new VR users can’t be overstated, as first impressions of VR headsets vary widely. Some are scared to move an inch, while others over-confidently charge across the room, heedless of furniture or walls. But I was pleased to see experienced VR users among the group easily grasp the controls and play through without much explanation at all. Being able to see the user’s point-of-view in VR projected on the wall via Chromecast was critical to helping everyone, and keeping the crowd engaged.

Getting a good, tight fit on the Quest 2 headstrap remained a persistent challenge for many. Glasses and some hairstyles don’t always neatly fit into the headset, either. And, of course, sanitization of the silicone face pad, controllers and strap after every single use is more important than ever.

We cleared space, set up the Guardian fence, and used a Chromecast to stream the VR user’s point of view to a projector for the whole crowd to see.

We opted for a single-controller input style, which let me demonstrate proper controller use with the off-hand. In a production setting, much of this instruction would be automated and tutorialized within the game itself.

All in all it was a big success. Some users were frustrated by the experience at times, which stands to reason. But I was happy with how many people stepped in, understood the game and mechanics intuitively, and quickly shot a three or four, pumping their fists when they made it. One creative director even shot a two with a smart bank, and the room erupted (shoutout Josh Chavis!). Everyone else was awarded a five for playing hard and giving good effort.

To be fair, here’s an incomplete list of what 40 hours of WebXR dev doesn’t buy you:

Creative edits: I rubber stamped AJ’s art, and the Mini Masters team rubber stamped the entire project. We made a few concessions in course design, but otherwise basically yes-anded the whole thing.

UX testing: approximately five people tested this app before it went live — none of whom were left-handed, we later realized. That is… not ideal.

Input and UI: I mean, it’s bad, and basically requires someone standing there explaining how to play for all but the most advanced/familiar VR users. Tutorialization of gameplay is a step even the simplest VR activation should plan for.

Completely robust optimization & physics: for all our tuning, physics remains susceptible to errors when framerate falters, and it didn’t help that we were using some processing headroom to run Chromecast screen-sharing. In a native VR app context, this wouldn’t be as much of a problem.

Solves for all known bugs: the teleport is slightly offset in a way we couldn’t debug on short notice — which is, admittedly, wildly disorienting. Also, grabbing the club with the wrong controller makes the club disappear and… yeah, I don’t know why.

But to reiterate, for the effort and time we put in and the fun we had, we’re incredibly happy with and proud of this project. This was a great way to show off the possibilities of WebXR and take part in company gatherings in a fun, creative, and even efficient way. I am not sure we could be more on-trend:

In the spirit of Ada Rose Cannon’s original starter project, Paradowski Creative is also happy to open source “Above Par-adowski Mini-Golf” code and assets on Glitch, which is available to play, view and remix now at:

James C. Kane is Director of Emerging Technology at Paradowski Creative, currently focused on the intersection of spatial computing, machine learning, and web/app development.

In this game, you can pet the capybara.